AnimateLCM: Computation-Efficient Personalized Style Video Generation without Personalized Video Data

Some of the best animations generated with AnimateLCM in 4 steps!

Abstract

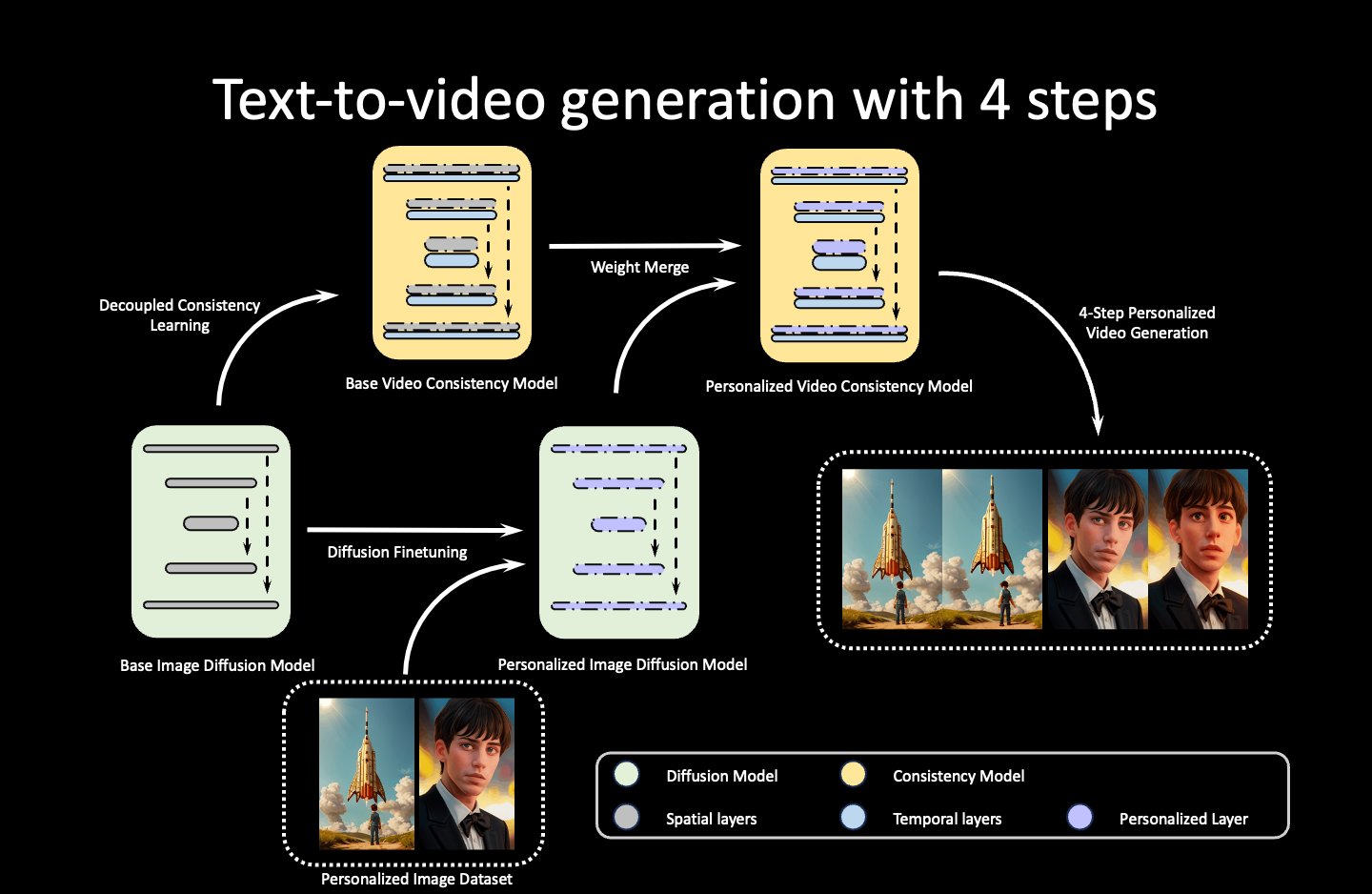

Video diffusion models have been gaining increasing attention for their ability to produce videos that are both coherent and of high fidelity. However, the iterative denoising process makes them computationally intensive and time-consuming, which limits their applications. Inspired by the Consistency Model (CM), which distills pretrained image diffusion models to accelerate sampling to a minimal number of steps, and by its successful extension to conditional image generation, the Latent Consistency Model (LCM), we propose AnimateLCM, which enables high-fidelity video generation within minimal steps. Instead of directly conducting consistency learning on the raw video dataset, we propose a decoupled consistency learning strategy that decouples the distillation of image generation priors and motion generation priors, which improves training efficiency and enhances the visual quality of the generated videos. Additionally, to enable the combination of plug-and-play adapters from the Stable Diffusion community that provide various functions (e.g., ControlNet for controllable generation), we propose an efficient strategy to adapt existing adapters to our distilled text-conditioned video consistency model, or to train adapters from scratch, without harming sampling speed. We validate the proposed strategy on image-conditioned and layout-conditioned video generation, achieving top-performing results in both.
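The abstract builds on the Consistency Model (CM), whose central object is a consistency function f(x, t) that maps any noisy point on a sampling trajectory directly back to its clean origin, subject to the boundary condition f(x, ε) = x. The minimal sketch below shows the skip-connection parameterization from the original Consistency Models paper, which satisfies that condition by construction. It is an illustration under assumptions: SIGMA_DATA = 0.5 and EPS = 0.002 follow that paper's setup, `net` is a hypothetical stand-in for the backbone, and LCM adapts these scalings to discrete latent-diffusion timesteps, so the exact values used by AnimateLCM may differ.

```python
import numpy as np

SIGMA_DATA = 0.5  # data standard deviation assumed in the CM paper (not from AnimateLCM)
EPS = 0.002       # smallest time, where the boundary condition f(x, EPS) = x applies


def c_skip(t: float) -> float:
    """Weight on the noisy input; equals 1 exactly at t = EPS."""
    return SIGMA_DATA**2 / ((t - EPS) ** 2 + SIGMA_DATA**2)


def c_out(t: float) -> float:
    """Weight on the network output; equals 0 exactly at t = EPS."""
    return SIGMA_DATA * (t - EPS) / np.sqrt(SIGMA_DATA**2 + t**2)


def consistency_fn(x: np.ndarray, t: float, net) -> np.ndarray:
    """f(x, t) = c_skip(t) * x + c_out(t) * F(x, t); `net` plays the role of F (hypothetical)."""
    return c_skip(t) * x + c_out(t) * net(x, t)


# Usage with a dummy backbone that always predicts ones.
dummy_net = lambda x, t: np.ones_like(x)
x = np.array([1.0, -2.0, 3.0])
y = consistency_fn(x, EPS, dummy_net)  # returns x unchanged, whatever the network outputs
```

Because c_skip(ε) = 1 and c_out(ε) = 0, the boundary condition holds for any network, so distillation only has to enforce agreement between adjacent points on the same trajectory.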

Side-By-Side Comparison

Animations generated by AnimateLCM and alternative methods in 4 steps. AnimateLCM is significantly better.

Extension for efficient video-to-video stylization

The base length of AnimateLCM is 16 frames (2 seconds), in line with most mainstream video generation models. AnimateLCM can be applied to longer video generation or video-to-video stylization in a zero-shot manner, although quality degrades slightly because it was never trained on such long videos. Prompt: "Green alien, red eyes." The source video is available on X.
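At the stated base length, 16 frames spanning 2 seconds corresponds to 8 frames per second, and a video 4 times the base length is 64 frames (about 8 seconds). The page does not describe how AnimateLCM handles the extra frames in zero-shot mode. One common generic approach is to denoise overlapping 16-frame temporal windows and average the predictions where windows overlap. The sketch below assumes that approach only; `temporal_windows`, `blend_windows`, and the stride of 8 are hypothetical choices, not taken from AnimateLCM.

```python
import numpy as np

BASE_FRAMES = 16                     # base length stated on the page
BASE_SECONDS = 2.0
FPS = BASE_FRAMES / BASE_SECONDS     # 8.0 frames per second
LONG_FRAMES = 4 * BASE_FRAMES        # 64 frames, "4 times longer than the base length"
LONG_SECONDS = LONG_FRAMES / FPS     # 8.0 seconds


def temporal_windows(num_frames: int, window: int = BASE_FRAMES, stride: int = 8) -> list:
    """Hypothetical helper: [start, end) ranges of `window` frames covering
    `num_frames`, with neighbouring windows overlapping by window - stride."""
    if num_frames <= window:
        return [(0, num_frames)]
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        starts.append(num_frames - window)  # extra window so the tail is covered
    return [(s, s + window) for s in starts]


def blend_windows(per_window_preds, windows, num_frames):
    """Hypothetical helper: average overlapping per-window predictions frame by frame."""
    frame_shape = per_window_preds[0].shape[1:]
    acc = np.zeros((num_frames, *frame_shape))
    count = np.zeros(num_frames)
    for pred, (start, end) in zip(per_window_preds, windows):
        acc[start:end] += pred
        count[start:end] += 1
    # Broadcast the per-frame counts over the remaining latent dimensions.
    return acc / count.reshape((-1,) + (1,) * len(frame_shape))


# Usage: seven 16-frame windows with stride 8 cover a 64-frame video.
windows = temporal_windows(LONG_FRAMES)
```

In such a scheme each window stays at the length the model was trained on, which is one plausible reason why quality degrades only slightly on longer clips; the overlap size trades extra compute for smoother transitions between windows.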

Extension for efficient longer video generation

The following videos are 4 times longer than the base length.

Gallery Ⅰ: Standard Generation: Realistic

Here we demonstrate animations generated by AnimateLCM with minimal steps.

Gallery Ⅱ: Standard Generation: Anime

Here we demonstrate animations generated by AnimateLCM with minimal steps.

Gallery Ⅲ: Standard Generation: Cartoon 3D

Here we demonstrate animations generated by AnimateLCM with minimal steps.

Gallery Ⅳ: Image-to-Video Generation

Here we demonstrate image-to-video generation results of AnimateLCM with minimal steps.

Find the source images in Realistic.

Find the source images in RCNZ.

Find the source images in Lyriel.

Find the source images in ToonYou.

Gallery Ⅴ: Controllable Video Generation

Here we demonstrate controllable video generation results of AnimateLCM with minimal steps.

Ablation: Number of Function Evaluations (NFE)

Here we demonstrate video generation results of AnimateLCM with different inference steps.
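In multistep consistency sampling, each step makes one network call: the model maps the current noisy latent directly to a clean estimate, which is then re-noised to the next, smaller timestep. The number of steps therefore equals the NFE, assuming guidance is distilled into the model rather than computed with a second classifier-free-guidance pass. The minimal NumPy sketch below illustrates this loop under stated assumptions: evenly spaced timesteps over a 1000-step training schedule, the "scaled linear" beta schedule used by Stable Diffusion (beta from 0.00085 to 0.012), and a hypothetical `consistency_fn(x, t)` standing in for the distilled video model. AnimateLCM's actual timestep selection may differ.

```python
import numpy as np

NUM_TRAIN_STEPS = 1000
# Stable Diffusion's "scaled linear" beta schedule (an assumption for this sketch).
BETAS = np.linspace(0.00085**0.5, 0.012**0.5, NUM_TRAIN_STEPS) ** 2
ALPHAS_CUMPROD = np.cumprod(1.0 - BETAS)


def sample_timesteps(nfe: int) -> list:
    """Hypothetical schedule: `nfe` evenly spaced, descending timesteps."""
    step = NUM_TRAIN_STEPS // nfe
    return [NUM_TRAIN_STEPS - 1 - i * step for i in range(nfe)]


def multistep_consistency_sample(consistency_fn, shape, nfe: int, seed: int = 0):
    """Run `nfe` consistency steps; each step costs exactly one model call."""
    rng = np.random.default_rng(seed)
    timesteps = sample_timesteps(nfe)
    x = rng.standard_normal(shape)  # start from pure Gaussian noise
    for i, t in enumerate(timesteps):
        x0 = consistency_fn(x, t)  # jump directly to a clean-latent estimate
        if i + 1 < len(timesteps):
            # Re-noise the estimate to the next (smaller) timestep.
            a_next = ALPHAS_CUMPROD[timesteps[i + 1]]
            noise = rng.standard_normal(shape)
            x = np.sqrt(a_next) * x0 + np.sqrt(1.0 - a_next) * noise
        else:
            x = x0  # the final estimate is the output
    return x


# Usage: record which timesteps a placeholder model is queried at.
calls = []


def toy_model(x, t):
    calls.append(t)
    return 0.5 * x  # placeholder for the distilled video model (hypothetical)


video_latent = multistep_consistency_sample(toy_model, (16, 4, 8, 8), nfe=4)
```

With `nfe=4` the placeholder model is queried at timesteps 999, 749, 499 and 249. Raising `nfe` adds more re-noise and denoise refinement rounds at the cost of one network evaluation each.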